diff --git a/.cspell.yml b/.cspell.yml

new file mode 100644

index 000000000..f56756a87

--- /dev/null

+++ b/.cspell.yml

@@ -0,0 +1,6 @@

+ignoreWords:

+ - childs # This spelling is used in the files command

+ - NodeCreater # This spelling is used in the fuse dependency

+ - Boddy # One of the contributors to the project - Chris Boddy

+ - Botto # One of the contributors to the project - Santiago Botto

+ - cose # dag-cose

\ No newline at end of file

diff --git a/.gitattributes b/.gitattributes

index 831606f19..280c95af2 100644

--- a/.gitattributes

+++ b/.gitattributes

@@ -15,3 +15,23 @@ LICENSE text eol=auto

# Binary assets

assets/init-doc/* binary

core/coreunix/test_data/** binary

+test/cli/migrations/testdata/** binary

+

+# Generated test data

+test/cli/migrations/testdata/** linguist-generated=true

+test/cli/autoconf/testdata/** linguist-generated=true

+test/cli/fixtures/** linguist-generated=true

+test/sharness/t0054-dag-car-import-export-data/** linguist-generated=true

+test/sharness/t0109-gateway-web-_redirects-data/** linguist-generated=true

+test/sharness/t0114-gateway-subdomains/** linguist-generated=true

+test/sharness/t0115-gateway-dir-listing/** linguist-generated=true

+test/sharness/t0116-gateway-cache/** linguist-generated=true

+test/sharness/t0119-prometheus-data/** linguist-generated=true

+test/sharness/t0165-keystore-data/** linguist-generated=true

+test/sharness/t0275-cid-security-data/** linguist-generated=true

+test/sharness/t0280-plugin-dag-jose-data/** linguist-generated=true

+test/sharness/t0280-plugin-data/** linguist-generated=true

+test/sharness/t0280-plugin-git-data/** linguist-generated=true

+test/sharness/t0400-api-no-gateway/** linguist-generated=true

+test/sharness/t0701-delegated-routing-reframe/** linguist-generated=true

+test/sharness/t0702-delegated-routing-http/** linguist-generated=true

diff --git a/.github/ISSUE_TEMPLATE/bug-report.yml b/.github/ISSUE_TEMPLATE/bug-report.yml

index b0d0d1f0d..d89f921b8 100644

--- a/.github/ISSUE_TEMPLATE/bug-report.yml

+++ b/.github/ISSUE_TEMPLATE/bug-report.yml

@@ -32,8 +32,9 @@ body:

label: Installation method

description: Please select your installation method

options:

+ - dist.ipfs.tech or ipfs-update

+ - docker image

- ipfs-desktop

- - ipfs-update or dist.ipfs.tech

- third-party binary

- built from source

- type: textarea

diff --git a/.github/ISSUE_TEMPLATE/enhancement.yml b/.github/ISSUE_TEMPLATE/enhancement.yml

index a0b241b55..d2f7a9205 100644

--- a/.github/ISSUE_TEMPLATE/enhancement.yml

+++ b/.github/ISSUE_TEMPLATE/enhancement.yml

@@ -2,6 +2,7 @@ name: Enhancement

description: Suggest an improvement to an existing kubo feature.

labels:

- kind/enhancement

+ - need/triage

body:

- type: markdown

attributes:

diff --git a/.github/ISSUE_TEMPLATE/feature.yml b/.github/ISSUE_TEMPLATE/feature.yml

index d368588b4..77445f29f 100644

--- a/.github/ISSUE_TEMPLATE/feature.yml

+++ b/.github/ISSUE_TEMPLATE/feature.yml

@@ -2,6 +2,7 @@ name: Feature

description: Suggest a new feature in Kubo.

labels:

- kind/feature

+ - need/triage

body:

- type: markdown

attributes:

diff --git a/.github/build-platforms.yml b/.github/build-platforms.yml

new file mode 100644

index 000000000..456489e60

--- /dev/null

+++ b/.github/build-platforms.yml

@@ -0,0 +1,17 @@

+# Build platforms configuration for Kubo

+# Matches https://github.com/ipfs/distributions/blob/master/dists/kubo/build_matrix

+# plus linux-riscv64 for emerging architecture support

+#

+# The Go compiler handles FUSE support automatically via build tags.

+# Platforms are simply listed - no need to specify FUSE capability.

+

+platforms:

+ - darwin-amd64

+ - darwin-arm64

+ - freebsd-amd64

+ - linux-amd64

+ - linux-arm64

+ - linux-riscv64

+ - openbsd-amd64

+ - windows-amd64

+ - windows-arm64

\ No newline at end of file

diff --git a/.github/workflows/codeql-analysis.yml b/.github/workflows/codeql-analysis.yml

index d0e082d65..f1acf21e0 100644

--- a/.github/workflows/codeql-analysis.yml

+++ b/.github/workflows/codeql-analysis.yml

@@ -29,21 +29,21 @@ jobs:

steps:

- name: Checkout repository

- uses: actions/checkout@v4

+ uses: actions/checkout@v6

- name: Setup Go

- uses: actions/setup-go@v5

+ uses: actions/setup-go@v6

with:

- go-version: 1.22.x

+ go-version-file: 'go.mod'

# Initializes the CodeQL tools for scanning.

- name: Initialize CodeQL

- uses: github/codeql-action/init@v3

+ uses: github/codeql-action/init@v4

with:

languages: go

- name: Autobuild

- uses: github/codeql-action/autobuild@v3

+ uses: github/codeql-action/autobuild@v4

- name: Perform CodeQL Analysis

- uses: github/codeql-action/analyze@v3

+ uses: github/codeql-action/analyze@v4

diff --git a/.github/workflows/docker-build.yml b/.github/workflows/docker-build.yml

deleted file mode 100644

index 433240f42..000000000

--- a/.github/workflows/docker-build.yml

+++ /dev/null

@@ -1,34 +0,0 @@

-# If we decide to run build-image.yml on every PR, we could deprecate this workflow.

-name: Docker Build

-

-on:

- workflow_dispatch:

- pull_request:

- paths-ignore:

- - '**/*.md'

- push:

- branches:

- - 'master'

-

-concurrency:

- group: ${{ github.workflow }}-${{ github.event_name }}-${{ github.event_name == 'push' && github.sha || github.ref }}

- cancel-in-progress: true

-

-jobs:

- docker-build:

- if: github.repository == 'ipfs/kubo' || github.event_name == 'workflow_dispatch'

- runs-on: ubuntu-latest

- timeout-minutes: 10

- env:

- IMAGE_NAME: ipfs/kubo

- WIP_IMAGE_TAG: wip

- defaults:

- run:

- shell: bash

- steps:

- - uses: actions/setup-go@v5

- with:

- go-version: 1.22.x

- - uses: actions/checkout@v4

- - run: docker build -t $IMAGE_NAME:$WIP_IMAGE_TAG .

- - run: docker run --rm $IMAGE_NAME:$WIP_IMAGE_TAG --version

diff --git a/.github/workflows/docker-check.yml b/.github/workflows/docker-check.yml

new file mode 100644

index 000000000..884155050

--- /dev/null

+++ b/.github/workflows/docker-check.yml

@@ -0,0 +1,62 @@

+# This workflow performs a quick Docker build check on PRs and pushes to master.

+# It builds the Docker image and runs a basic smoke test to ensure the image works.

+# This is a lightweight check - for full multi-platform builds and publishing, see docker-image.yml

+name: Docker Check

+

+on:

+ workflow_dispatch:

+ pull_request:

+ paths-ignore:

+ - '**/*.md'

+ push:

+ branches:

+ - 'master'

+

+concurrency:

+ group: ${{ github.workflow }}-${{ github.event_name }}-${{ github.event_name == 'push' && github.sha || github.ref }}

+ cancel-in-progress: true

+

+jobs:

+ lint:

+ if: github.repository == 'ipfs/kubo' || github.event_name == 'workflow_dispatch'

+ runs-on: ubuntu-latest

+ timeout-minutes: 5

+ steps:

+ - uses: actions/checkout@v6

+ - uses: hadolint/hadolint-action@v3.3.0

+ with:

+ dockerfile: Dockerfile

+ failure-threshold: warning

+ verbose: true

+ format: tty

+

+ build:

+ if: github.repository == 'ipfs/kubo' || github.event_name == 'workflow_dispatch'

+ runs-on: ubuntu-latest

+ timeout-minutes: 10

+ env:

+ IMAGE_NAME: ipfs/kubo

+ WIP_IMAGE_TAG: wip

+ defaults:

+ run:

+ shell: bash

+ steps:

+ - uses: actions/checkout@v6

+

+ - name: Set up Docker Buildx

+ uses: docker/setup-buildx-action@v3

+

+ - name: Build Docker image with BuildKit

+ uses: docker/build-push-action@v6

+ with:

+ context: .

+ push: false

+ load: true

+ tags: ${{ env.IMAGE_NAME }}:${{ env.WIP_IMAGE_TAG }}

+ cache-from: |

+ type=gha

+ type=registry,ref=${{ env.IMAGE_NAME }}:buildcache

+ cache-to: type=gha,mode=max

+

+ - name: Test Docker image

+ run: docker run --rm $IMAGE_NAME:$WIP_IMAGE_TAG --version

diff --git a/.github/workflows/docker-image.yml b/.github/workflows/docker-image.yml

index f5642fe6d..39eaf52f4 100644

--- a/.github/workflows/docker-image.yml

+++ b/.github/workflows/docker-image.yml

@@ -1,3 +1,7 @@

+# This workflow builds and publishes official Docker images to Docker Hub.

+# It handles multi-platform builds (amd64, arm/v7, arm64/v8) and pushes tagged releases.

+# This workflow is triggered on tags, specific branches, and can be manually dispatched.

+# For quick build checks during development, see docker-check.yml

name: Docker Push

on:

@@ -19,6 +23,7 @@ on:

push:

branches:

- 'master'

+ - 'staging'

- 'bifrost-*'

tags:

- 'v*'

@@ -31,13 +36,14 @@ jobs:

if: github.repository == 'ipfs/kubo' || github.event_name == 'workflow_dispatch'

name: Push Docker image to Docker Hub

runs-on: ubuntu-latest

- timeout-minutes: 90

+ timeout-minutes: 15

env:

IMAGE_NAME: ipfs/kubo

- LEGACY_IMAGE_NAME: ipfs/go-ipfs

+ outputs:

+ tags: ${{ steps.tags.outputs.value }}

steps:

- name: Check out the repo

- uses: actions/checkout@v4

+ uses: actions/checkout@v6

- name: Set up QEMU

uses: docker/setup-qemu-action@v3

@@ -45,13 +51,11 @@ jobs:

- name: Set up Docker Buildx

uses: docker/setup-buildx-action@v3

- - name: Cache Docker layers

- uses: actions/cache@v4

+ - name: Log in to Docker Hub

+ uses: docker/login-action@v3

with:

- path: /tmp/.buildx-cache

- key: ${{ runner.os }}-buildx-${{ github.sha }}

- restore-keys: |

- ${{ runner.os }}-buildx-

+ username: ${{ vars.DOCKER_USERNAME }}

+ password: ${{ secrets.DOCKER_PASSWORD }}

- name: Get tags

id: tags

@@ -62,12 +66,6 @@ jobs:

echo "EOF" >> $GITHUB_OUTPUT

shell: bash

- - name: Log in to Docker Hub

- uses: docker/login-action@v3

- with:

- username: ${{ vars.DOCKER_USERNAME }}

- password: ${{ secrets.DOCKER_PASSWORD }}

-

# We have to build each platform separately because when using multi-arch

# builds, only one platform is being loaded into the cache. This would

# prevent us from testing the other platforms.

@@ -80,8 +78,10 @@ jobs:

load: true

file: ./Dockerfile

tags: ${{ env.IMAGE_NAME }}:linux-amd64

- cache-from: type=local,src=/tmp/.buildx-cache

- cache-to: type=local,dest=/tmp/.buildx-cache-new

+ cache-from: |

+ type=gha

+ type=registry,ref=${{ env.IMAGE_NAME }}:buildcache

+ cache-to: type=gha,mode=max

- name: Build Docker image (linux/arm/v7)

uses: docker/build-push-action@v6

@@ -92,8 +92,10 @@ jobs:

load: true

file: ./Dockerfile

tags: ${{ env.IMAGE_NAME }}:linux-arm-v7

- cache-from: type=local,src=/tmp/.buildx-cache

- cache-to: type=local,dest=/tmp/.buildx-cache-new

+ cache-from: |

+ type=gha

+ type=registry,ref=${{ env.IMAGE_NAME }}:buildcache

+ cache-to: type=gha,mode=max

- name: Build Docker image (linux/arm64/v8)

uses: docker/build-push-action@v6

@@ -104,14 +106,24 @@ jobs:

load: true

file: ./Dockerfile

tags: ${{ env.IMAGE_NAME }}:linux-arm64-v8

- cache-from: type=local,src=/tmp/.buildx-cache

- cache-to: type=local,dest=/tmp/.buildx-cache-new

+ cache-from: |

+ type=gha

+ type=registry,ref=${{ env.IMAGE_NAME }}:buildcache

+ cache-to: type=gha,mode=max

# We test all the images on amd64 host here. This uses QEMU to emulate

# the other platforms.

- - run: docker run --rm $IMAGE_NAME:linux-amd64 --version

- - run: docker run --rm $IMAGE_NAME:linux-arm-v7 --version

- - run: docker run --rm $IMAGE_NAME:linux-arm64-v8 --version

+ # NOTE: the version command should finish instantly, but sometimes

+ # it hangs on GitHub CI (possibly a QEMU issue), so we retry to avoid spurious failures

+ - name: Smoke-test linux-amd64

+ run: for i in {1..3}; do timeout 15s docker run --rm $IMAGE_NAME:linux-amd64 version --all && break || [ $i = 3 ] && exit 1; done

+ timeout-minutes: 1

+ - name: Smoke-test linux-arm-v7

+ run: for i in {1..3}; do timeout 15s docker run --rm $IMAGE_NAME:linux-arm-v7 version --all && break || [ $i = 3 ] && exit 1; done

+ timeout-minutes: 1

+ - name: Smoke-test linux-arm64-v8

+ run: for i in {1..3}; do timeout 15s docker run --rm $IMAGE_NAME:linux-arm64-v8 version --all && break || [ $i = 3 ] && exit 1; done

+ timeout-minutes: 1

# This will only push the previously built images.

- if: github.event_name != 'workflow_dispatch' || github.event.inputs.push == 'true'

@@ -123,12 +135,9 @@ jobs:

push: true

file: ./Dockerfile

tags: "${{ github.event.inputs.tags || steps.tags.outputs.value }}"

- cache-from: type=local,src=/tmp/.buildx-cache-new

- cache-to: type=local,dest=/tmp/.buildx-cache-new

-

- # https://github.com/docker/build-push-action/issues/252

- # https://github.com/moby/buildkit/issues/1896

- - name: Move cache to limit growth

- run: |

- rm -rf /tmp/.buildx-cache

- mv /tmp/.buildx-cache-new /tmp/.buildx-cache

+ cache-from: |

+ type=gha

+ type=registry,ref=${{ env.IMAGE_NAME }}:buildcache

+ cache-to: |

+ type=gha,mode=max

+ type=registry,ref=${{ env.IMAGE_NAME }}:buildcache,mode=max
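
Reviewer note: the three smoke-test steps in this file repeat the same one-line retry loop (up to three attempts, each capped at 15 seconds). A minimal sketch of that pattern as a standalone function may make the control flow easier to follow. It assumes the `timeout` utility from GNU coreutils is available, and `retry_with_timeout` is a hypothetical name that does not appear in the patch.

```shell
# Hypothetical helper (not part of the patch): run a command up to
# $1 times, giving each attempt at most $2 (a duration accepted by
# timeout, e.g. 15s). Returns 0 on the first success, 1 if every
# attempt fails or times out.
retry_with_timeout() {
  attempts="$1"
  limit="$2"
  shift 2
  i=1
  while [ "$i" -le "$attempts" ]; do
    if timeout "$limit" "$@"; then
      return 0
    fi
    echo "attempt $i of $attempts failed" >&2
    i=$((i + 1))
  done
  return 1
}

# Usage with a stand-in command; in the workflow the command would be
# the docker run invocation for each image tag.
retry_with_timeout 3 15s true && echo "smoke test passed"
```

Unlike the inline `&& break || [ $i = 3 ] && exit 1` form, this version reports each failed attempt and leaves the decision to abort to the caller.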

diff --git a/.github/workflows/gateway-conformance.yml b/.github/workflows/gateway-conformance.yml

index b1791868c..f2cd854c5 100644

--- a/.github/workflows/gateway-conformance.yml

+++ b/.github/workflows/gateway-conformance.yml

@@ -41,22 +41,21 @@ jobs:

steps:

# 1. Download the gateway-conformance fixtures

- name: Download gateway-conformance fixtures

- uses: ipfs/gateway-conformance/.github/actions/extract-fixtures@v0.6

+ uses: ipfs/gateway-conformance/.github/actions/extract-fixtures@v0.8

with:

output: fixtures

# 2. Build the kubo-gateway

- - name: Setup Go

- uses: actions/setup-go@v5

- with:

- go-version: 1.22.x

- - uses: protocol/cache-go-action@v1

- with:

- name: ${{ github.job }}

- name: Checkout kubo-gateway

- uses: actions/checkout@v4

+ uses: actions/checkout@v6

with:

path: kubo-gateway

+ - name: Setup Go

+ uses: actions/setup-go@v6

+ with:

+ go-version-file: 'kubo-gateway/go.mod'

+ cache: true

+ cache-dependency-path: kubo-gateway/go.sum

- name: Build kubo-gateway

run: make build

working-directory: kubo-gateway

@@ -94,7 +93,7 @@ jobs:

# 6. Run the gateway-conformance tests

- name: Run gateway-conformance tests

- uses: ipfs/gateway-conformance/.github/actions/test@v0.6

+ uses: ipfs/gateway-conformance/.github/actions/test@v0.8

with:

gateway-url: http://127.0.0.1:8080

subdomain-url: http://localhost:8080

@@ -110,13 +109,13 @@ jobs:

run: cat output.md >> $GITHUB_STEP_SUMMARY

- name: Upload HTML report

if: failure() || success()

- uses: actions/upload-artifact@v4

+ uses: actions/upload-artifact@v6

with:

name: gateway-conformance.html

path: output.html

- name: Upload JSON report

if: failure() || success()

- uses: actions/upload-artifact@v4

+ uses: actions/upload-artifact@v6

with:

name: gateway-conformance.json

path: output.json

@@ -128,22 +127,21 @@ jobs:

steps:

# 1. Download the gateway-conformance fixtures

- name: Download gateway-conformance fixtures

- uses: ipfs/gateway-conformance/.github/actions/extract-fixtures@v0.6

+ uses: ipfs/gateway-conformance/.github/actions/extract-fixtures@v0.8

with:

output: fixtures

# 2. Build the kubo-gateway

- - name: Setup Go

- uses: actions/setup-go@v5

- with:

- go-version: 1.22.x

- - uses: protocol/cache-go-action@v1

- with:

- name: ${{ github.job }}

- name: Checkout kubo-gateway

- uses: actions/checkout@v4

+ uses: actions/checkout@v6

with:

path: kubo-gateway

+ - name: Setup Go

+ uses: actions/setup-go@v6

+ with:

+ go-version-file: 'kubo-gateway/go.mod'

+ cache: true

+ cache-dependency-path: kubo-gateway/go.sum

- name: Build kubo-gateway

run: make build

working-directory: kubo-gateway

@@ -201,7 +199,7 @@ jobs:

# 9. Run the gateway-conformance tests over libp2p

- name: Run gateway-conformance tests over libp2p

- uses: ipfs/gateway-conformance/.github/actions/test@v0.6

+ uses: ipfs/gateway-conformance/.github/actions/test@v0.8

with:

gateway-url: http://127.0.0.1:8092

args: --specs "trustless-gateway,-trustless-ipns-gateway" -skip 'TestGatewayCar/GET_response_for_application/vnd.ipld.car/Header_Content-Length'

@@ -216,13 +214,13 @@ jobs:

run: cat output.md >> $GITHUB_STEP_SUMMARY

- name: Upload HTML report

if: failure() || success()

- uses: actions/upload-artifact@v4

+ uses: actions/upload-artifact@v6

with:

name: gateway-conformance-libp2p.html

path: output.html

- name: Upload JSON report

if: failure() || success()

- uses: actions/upload-artifact@v4

+ uses: actions/upload-artifact@v6

with:

name: gateway-conformance-libp2p.json

path: output.json

diff --git a/.github/workflows/generated-pr.yml b/.github/workflows/generated-pr.yml

new file mode 100644

index 000000000..b8c5cc631

--- /dev/null

+++ b/.github/workflows/generated-pr.yml

@@ -0,0 +1,14 @@

+name: Close Generated PRs

+

+on:

+ schedule:

+ - cron: '0 0 * * *'

+ workflow_dispatch:

+

+permissions:

+ issues: write

+ pull-requests: write

+

+jobs:

+ stale:

+ uses: ipdxco/unified-github-workflows/.github/workflows/reusable-generated-pr.yml@v1

diff --git a/.github/workflows/gobuild.yml b/.github/workflows/gobuild.yml

index 93159eadd..5134f1cd1 100644

--- a/.github/workflows/gobuild.yml

+++ b/.github/workflows/gobuild.yml

@@ -21,20 +21,38 @@ jobs:

env:

TEST_DOCKER: 0

TEST_VERBOSE: 1

- TRAVIS: 1

GIT_PAGER: cat

IPFS_CHECK_RCMGR_DEFAULTS: 1

defaults:

run:

shell: bash

steps:

- - uses: actions/setup-go@v5

+ - uses: actions/checkout@v6

+ - uses: actions/setup-go@v6

with:

- go-version: 1.22.x

- - uses: actions/checkout@v4

- - run: make cmd/ipfs-try-build

- env:

- TEST_FUSE: 1

- - run: make cmd/ipfs-try-build

- env:

- TEST_FUSE: 0

+ go-version-file: 'go.mod'

+ cache: true

+ cache-dependency-path: go.sum

+

+ - name: Build all platforms

+ run: |

+ # Read platforms from build-platforms.yml and build each one

+ echo "Building kubo for all platforms..."

+

+ # Read and build each platform

+ grep '^ - ' .github/build-platforms.yml | sed 's/^ - //' | while read -r platform; do

+ if [ -z "$platform" ]; then

+ continue

+ fi

+

+ echo "::group::Building $platform"

+ GOOS=$(echo "$platform" | cut -d- -f1)

+ GOARCH=$(echo "$platform" | cut -d- -f2)

+

+ echo "Building $platform"

+ echo " GOOS=$GOOS GOARCH=$GOARCH go build -o /dev/null ./cmd/ipfs"

+ GOOS=$GOOS GOARCH=$GOARCH go build -o /dev/null ./cmd/ipfs

+ echo "::endgroup::"

+ done

+

+ echo "All platforms built successfully"

\ No newline at end of file
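
Reviewer note: the build loop above derives `GOOS` and `GOARCH` from each `<os>-<arch>` entry in `.github/build-platforms.yml` using `cut`. A minimal sketch of the same split using shell parameter expansion, which avoids spawning two subprocesses per platform. It assumes every entry contains exactly one hyphen, as the listed platforms do; `split_platform` is a hypothetical name, not part of the patch.

```shell
# Hypothetical helper (not part of the patch): split "<os>-<arch>"
# into its two parts and print them separated by a space.
split_platform() {
  platform="$1"
  # Text before the first '-' is the OS.
  goos="${platform%%-*}"
  # Text after the first '-' is the architecture.
  goarch="${platform#*-}"
  echo "$goos $goarch"
}

split_platform linux-riscv64
split_platform windows-arm64
```

For entries with more than one hyphen the two approaches would differ: `cut -d- -f2` keeps only the second field, while `${platform#*-}` keeps everything after the first hyphen.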

diff --git a/.github/workflows/golang-analysis.yml b/.github/workflows/golang-analysis.yml

index e89034a92..77bd9c3da 100644

--- a/.github/workflows/golang-analysis.yml

+++ b/.github/workflows/golang-analysis.yml

@@ -22,12 +22,12 @@ jobs:

runs-on: ubuntu-latest

timeout-minutes: 10

steps:

- - uses: actions/checkout@v4

+ - uses: actions/checkout@v6

with:

submodules: recursive

- - uses: actions/setup-go@v5

+ - uses: actions/setup-go@v6

with:

- go-version: "1.22.x"

+ go-version-file: 'go.mod'

- name: Check that go.mod is tidy

uses: protocol/multiple-go-modules@v1.4

with:

diff --git a/.github/workflows/golint.yml b/.github/workflows/golint.yml

index aa8b21b53..a68d0c126 100644

--- a/.github/workflows/golint.yml

+++ b/.github/workflows/golint.yml

@@ -22,15 +22,14 @@ jobs:

TEST_DOCKER: 0

TEST_FUSE: 0

TEST_VERBOSE: 1

- TRAVIS: 1

GIT_PAGER: cat

IPFS_CHECK_RCMGR_DEFAULTS: 1

defaults:

run:

shell: bash

steps:

- - uses: actions/setup-go@v5

+ - uses: actions/checkout@v6

+ - uses: actions/setup-go@v6

with:

- go-version: 1.22.x

- - uses: actions/checkout@v4

+ go-version-file: 'go.mod'

- run: make -O test_go_lint

diff --git a/.github/workflows/gotest.yml b/.github/workflows/gotest.yml

index 609791aba..8165eb12a 100644

--- a/.github/workflows/gotest.yml

+++ b/.github/workflows/gotest.yml

@@ -14,64 +14,42 @@ concurrency:

cancel-in-progress: true

jobs:

- go-test:

+ # Unit tests with coverage collection (uploaded to Codecov)

+ unit-tests:

if: github.repository == 'ipfs/kubo' || github.event_name == 'workflow_dispatch'

runs-on: ${{ fromJSON(github.repository == 'ipfs/kubo' && '["self-hosted", "linux", "x64", "2xlarge"]' || '"ubuntu-latest"') }}

- timeout-minutes: 20

+ timeout-minutes: 15

env:

+ GOTRACEBACK: single # reduce noise on test timeout panics

TEST_DOCKER: 0

TEST_FUSE: 0

TEST_VERBOSE: 1

- TRAVIS: 1

GIT_PAGER: cat

IPFS_CHECK_RCMGR_DEFAULTS: 1

defaults:

run:

shell: bash

steps:

- - name: Set up Go

- uses: actions/setup-go@v5

- with:

- go-version: 1.22.x

- name: Check out Kubo

- uses: actions/checkout@v4

+ uses: actions/checkout@v6

+ - name: Set up Go

+ uses: actions/setup-go@v6

+ with:

+ go-version-file: 'go.mod'

- name: Install missing tools

run: sudo apt update && sudo apt install -y zsh

- - name: 👉️ If this step failed, go to «Summary» (top left) → inspect the «Failures/Errors» table

- env:

- # increasing parallelism beyond 2 doesn't speed up the tests much

- PARALLEL: 2

+ - name: Run unit tests

run: |

- make -j "$PARALLEL" test/unit/gotest.junit.xml &&

+ make test_unit &&

[[ ! $(jq -s -c 'map(select(.Action == "fail")) | .[]' test/unit/gotest.json) ]]

- name: Upload coverage to Codecov

- uses: codecov/codecov-action@6d798873df2b1b8e5846dba6fb86631229fbcb17 # v4.4.0

+ uses: codecov/codecov-action@671740ac38dd9b0130fbe1cec585b89eea48d3de # v5.5.2

if: failure() || success()

with:

name: unittests

files: coverage/unit_tests.coverprofile

- - name: Test kubo-as-a-library example

- run: |

- # we want to first test with the kubo version in the go.mod file

- go test -v ./...

-

- # we also want to test the examples against the current version of kubo

- # however, that version might be in a fork so we need to replace the dependency

-

- # backup the go.mod and go.sum files to restore them after we run the tests

- cp go.mod go.mod.bak

- cp go.sum go.sum.bak

-

- # make sure the examples run against the current version of kubo

- go mod edit -replace github.com/ipfs/kubo=./../../..

- go mod tidy

-

- go test -v ./...

-

- # restore the go.mod and go.sum files to their original state

- mv go.mod.bak go.mod

- mv go.sum.bak go.sum

- working-directory: docs/examples/kubo-as-a-library

+ token: ${{ secrets.CODECOV_TOKEN }}

+ fail_ci_if_error: false

- name: Create a proper JUnit XML report

uses: ipdxco/gotest-json-to-junit-xml@v1

with:

@@ -79,9 +57,9 @@ jobs:

output: test/unit/gotest.junit.xml

if: failure() || success()

- name: Archive the JUnit XML report

- uses: actions/upload-artifact@v4

+ uses: actions/upload-artifact@v6

with:

- name: unit

+ name: unit-tests-junit

path: test/unit/gotest.junit.xml

if: failure() || success()

- name: Create a HTML report

@@ -92,9 +70,9 @@ jobs:

output: test/unit/gotest.html

if: failure() || success()

- name: Archive the HTML report

- uses: actions/upload-artifact@v4

+ uses: actions/upload-artifact@v6

with:

- name: html

+ name: unit-tests-html

path: test/unit/gotest.html

if: failure() || success()

- name: Create a Markdown report

@@ -107,3 +85,86 @@ jobs:

- name: Set the summary

run: cat test/unit/gotest.md >> $GITHUB_STEP_SUMMARY

if: failure() || success()

+

+ # End-to-end integration/regression tests from test/cli

+ # (Go-based replacement for legacy test/sharness shell scripts)

+ cli-tests:

+ if: github.repository == 'ipfs/kubo' || github.event_name == 'workflow_dispatch'

+ runs-on: ${{ fromJSON(github.repository == 'ipfs/kubo' && '["self-hosted", "linux", "x64", "2xlarge"]' || '"ubuntu-latest"') }}

+ timeout-minutes: 15

+ env:

+ GOTRACEBACK: single # reduce noise on test timeout panics

+ TEST_VERBOSE: 1

+ GIT_PAGER: cat

+ IPFS_CHECK_RCMGR_DEFAULTS: 1

+ defaults:

+ run:

+ shell: bash

+ steps:

+ - name: Check out Kubo

+ uses: actions/checkout@v6

+ - name: Set up Go

+ uses: actions/setup-go@v6

+ with:

+ go-version-file: 'go.mod'

+ - name: Install missing tools

+ run: sudo apt update && sudo apt install -y zsh

+ - name: Run CLI tests

+ env:

+ IPFS_PATH: ${{ runner.temp }}/ipfs-test

+ run: make test_cli

+ - name: Create JUnit XML report

+ uses: ipdxco/gotest-json-to-junit-xml@v1

+ with:

+ input: test/cli/cli-tests.json

+ output: test/cli/cli-tests.junit.xml

+ if: failure() || success()

+ - name: Archive JUnit XML report

+ uses: actions/upload-artifact@v6

+ with:

+ name: cli-tests-junit

+ path: test/cli/cli-tests.junit.xml

+ if: failure() || success()

+ - name: Create HTML report

+ uses: ipdxco/junit-xml-to-html@v1

+ with:

+ mode: no-frames

+ input: test/cli/cli-tests.junit.xml

+ output: test/cli/cli-tests.html

+ if: failure() || success()

+ - name: Archive HTML report

+ uses: actions/upload-artifact@v6

+ with:

+ name: cli-tests-html

+ path: test/cli/cli-tests.html

+ if: failure() || success()

+ - name: Create Markdown report

+ uses: ipdxco/junit-xml-to-html@v1

+ with:

+ mode: summary

+ input: test/cli/cli-tests.junit.xml

+ output: test/cli/cli-tests.md

+ if: failure() || success()

+ - name: Set summary

+ run: cat test/cli/cli-tests.md >> $GITHUB_STEP_SUMMARY

+ if: failure() || success()

+

+ # Example tests (kubo-as-a-library)

+ example-tests:

+ if: github.repository == 'ipfs/kubo' || github.event_name == 'workflow_dispatch'

+ runs-on: ${{ fromJSON(github.repository == 'ipfs/kubo' && '["self-hosted", "linux", "x64", "2xlarge"]' || '"ubuntu-latest"') }}

+ timeout-minutes: 5

+ env:

+ GOTRACEBACK: single

+ defaults:

+ run:

+ shell: bash

+ steps:

+ - name: Check out Kubo

+ uses: actions/checkout@v6

+ - name: Set up Go

+ uses: actions/setup-go@v6

+ with:

+ go-version-file: 'go.mod'

+ - name: Run example tests

+ run: make test_examples

diff --git a/.github/workflows/interop.yml b/.github/workflows/interop.yml

index 2967c9997..25bdba4f2 100644

--- a/.github/workflows/interop.yml

+++ b/.github/workflows/interop.yml

@@ -9,9 +9,6 @@ on:

branches:

- 'master'

-env:

- GO_VERSION: 1.22.x

-

concurrency:

group: ${{ github.workflow }}-${{ github.event_name }}-${{ github.event_name == 'push' && github.sha || github.ref }}

cancel-in-progress: true

@@ -29,19 +26,18 @@ jobs:

TEST_DOCKER: 0

TEST_FUSE: 0

TEST_VERBOSE: 1

- TRAVIS: 1

GIT_PAGER: cat

IPFS_CHECK_RCMGR_DEFAULTS: 1

defaults:

run:

shell: bash

steps:

- - uses: actions/setup-go@v5

+ - uses: actions/checkout@v6

+ - uses: actions/setup-go@v6

with:

- go-version: ${{ env.GO_VERSION }}

- - uses: actions/checkout@v4

+ go-version-file: 'go.mod'

- run: make build

- - uses: actions/upload-artifact@v4

+ - uses: actions/upload-artifact@v6

with:

name: kubo

path: cmd/ipfs/ipfs

@@ -53,17 +49,17 @@ jobs:

run:

shell: bash

steps:

- - uses: actions/setup-node@v4

+ - uses: actions/setup-node@v6

with:

node-version: lts/*

- - uses: actions/download-artifact@v4

+ - uses: actions/download-artifact@v7

with:

name: kubo

path: cmd/ipfs

- run: chmod +x cmd/ipfs/ipfs

- run: echo "dir=$(npm config get cache)" >> $GITHUB_OUTPUT

id: npm-cache-dir

- - uses: actions/cache@v4

+ - uses: actions/cache@v5

with:

path: ${{ steps.npm-cache-dir.outputs.dir }}

key: ${{ runner.os }}-${{ github.job }}-helia-${{ hashFiles('**/package-lock.json') }}

@@ -82,29 +78,28 @@ jobs:

LIBP2P_TCP_REUSEPORT: false

LIBP2P_ALLOW_WEAK_RSA_KEYS: 1

E2E_IPFSD_TYPE: go

- TRAVIS: 1

GIT_PAGER: cat

IPFS_CHECK_RCMGR_DEFAULTS: 1

defaults:

run:

shell: bash

steps:

- - uses: actions/setup-node@v4

+ - uses: actions/setup-node@v6

with:

- node-version: 18.14.0

- - uses: actions/download-artifact@v4

+ node-version: 20.x

+ - uses: actions/download-artifact@v7

with:

name: kubo

path: cmd/ipfs

- run: chmod +x cmd/ipfs/ipfs

- - uses: actions/checkout@v4

+ - uses: actions/checkout@v6

with:

repository: ipfs/ipfs-webui

path: ipfs-webui

- run: |

echo "dir=$(npm config get cache)" >> $GITHUB_OUTPUT

id: npm-cache-dir

- - uses: actions/cache@v4

+ - uses: actions/cache@v5

with:

path: ${{ steps.npm-cache-dir.outputs.dir }}

key: ${{ runner.os }}-${{ github.job }}-${{ hashFiles('**/package-lock.json') }}

diff --git a/.github/workflows/sharness.yml b/.github/workflows/sharness.yml

index 6432745bf..ac32bf3a4 100644

--- a/.github/workflows/sharness.yml

+++ b/.github/workflows/sharness.yml

@@ -4,10 +4,10 @@ on:

workflow_dispatch:

pull_request:

paths-ignore:

- - '**/*.md'

+ - "**/*.md"

push:

branches:

- - 'master'

+ - "master"

concurrency:

group: ${{ github.workflow }}-${{ github.event_name }}-${{ github.event_name == 'push' && github.sha || github.ref }}

@@ -17,22 +17,22 @@ jobs:

sharness-test:

if: github.repository == 'ipfs/kubo' || github.event_name == 'workflow_dispatch'

runs-on: ${{ fromJSON(github.repository == 'ipfs/kubo' && '["self-hosted", "linux", "x64", "4xlarge"]' || '"ubuntu-latest"') }}

- timeout-minutes: 20

+ timeout-minutes: ${{ github.repository == 'ipfs/kubo' && 15 || 60 }}

defaults:

run:

shell: bash

steps:

- - name: Setup Go

- uses: actions/setup-go@v5

- with:

- go-version: 1.22.x

- name: Checkout Kubo

- uses: actions/checkout@v4

+ uses: actions/checkout@v6

with:

path: kubo

+ - name: Setup Go

+ uses: actions/setup-go@v6

+ with:

+ go-version-file: 'kubo/go.mod'

- name: Install missing tools

run: sudo apt update && sudo apt install -y socat net-tools fish libxml2-utils

- - uses: actions/cache@v4

+ - uses: actions/cache@v5

with:

path: test/sharness/lib/dependencies

key: ${{ runner.os }}-test-generate-junit-html-${{ hashFiles('test/sharness/lib/test-generate-junit-html.sh') }}

@@ -55,11 +55,13 @@ jobs:

# increasing parallelism beyond 10 doesn't speed up the tests much

PARALLEL: ${{ github.repository == 'ipfs/kubo' && 10 || 3 }}

- name: Upload coverage report

- uses: codecov/codecov-action@6d798873df2b1b8e5846dba6fb86631229fbcb17 # v4.4.0

+ uses: codecov/codecov-action@671740ac38dd9b0130fbe1cec585b89eea48d3de # v5.5.2

if: failure() || success()

with:

name: sharness

files: kubo/coverage/sharness_tests.coverprofile

+ token: ${{ secrets.CODECOV_TOKEN }}

+ fail_ci_if_error: false

- name: Aggregate results

run: find kubo/test/sharness/test-results -name 't*-*.sh.*.counts' | kubo/test/sharness/lib/sharness/aggregate-results.sh > kubo/test/sharness/test-results/summary.txt

- name: 👉️ If this step failed, go to «Summary» (top left) → «HTML Report» → inspect the «Failures» column

@@ -88,7 +90,7 @@ jobs:

destination: sharness.html

- name: Upload one-page HTML report

if: github.repository != 'ipfs/kubo' && (failure() || success())

- uses: actions/upload-artifact@v4

+ uses: actions/upload-artifact@v6

with:

name: sharness.html

path: kubo/test/sharness/test-results/sharness.html

@@ -108,7 +110,7 @@ jobs:

destination: sharness-html/

- name: Upload full HTML report

if: github.repository != 'ipfs/kubo' && (failure() || success())

- uses: actions/upload-artifact@v4

+ uses: actions/upload-artifact@v6

with:

name: sharness-html

path: kubo/test/sharness/test-results/sharness-html

diff --git a/.github/workflows/spellcheck.yml b/.github/workflows/spellcheck.yml

new file mode 100644

index 000000000..4eda8b222

--- /dev/null

+++ b/.github/workflows/spellcheck.yml

@@ -0,0 +1,18 @@

+name: Spell Check

+

+on:

+ pull_request:

+ push:

+ branches: ["master"]

+ workflow_dispatch:

+

+permissions:

+ contents: read

+

+concurrency:

+ group: ${{ github.workflow }}-${{ github.event_name }}-${{ github.event_name == 'push' && github.sha || github.ref }}

+ cancel-in-progress: true

+

+jobs:

+ spellcheck:

+ uses: ipdxco/unified-github-workflows/.github/workflows/reusable-spellcheck.yml@v1

diff --git a/.github/workflows/stale.yml b/.github/workflows/stale.yml

index 16d65d721..7c955c414 100644

--- a/.github/workflows/stale.yml

+++ b/.github/workflows/stale.yml

@@ -1,8 +1,9 @@

-name: Close and mark stale issue

+name: Close Stale Issues

on:

schedule:

- cron: '0 0 * * *'

+ workflow_dispatch:

permissions:

issues: write

@@ -10,4 +11,4 @@ permissions:

jobs:

stale:

- uses: pl-strflt/.github/.github/workflows/reusable-stale-issue.yml@v0.3

+ uses: ipdxco/unified-github-workflows/.github/workflows/reusable-stale-issue.yml@v1

diff --git a/.github/workflows/sync-release-assets.yml b/.github/workflows/sync-release-assets.yml

index 0d5c8199b..33869f11d 100644

--- a/.github/workflows/sync-release-assets.yml

+++ b/.github/workflows/sync-release-assets.yml

@@ -22,11 +22,11 @@ jobs:

- uses: ipfs/start-ipfs-daemon-action@v1

with:

args: --init --init-profile=flatfs,server --enable-gc=false

- - uses: actions/setup-node@v4

+ - uses: actions/setup-node@v6

with:

node-version: 14

- name: Sync the latest 5 github releases

- uses: actions/github-script@v7

+ uses: actions/github-script@v8

with:

script: |

const fs = require('fs').promises

diff --git a/.github/workflows/test-migrations.yml b/.github/workflows/test-migrations.yml

new file mode 100644

index 000000000..35fcbe729

--- /dev/null

+++ b/.github/workflows/test-migrations.yml

@@ -0,0 +1,85 @@

+name: Migrations

+

+on:

+ workflow_dispatch:

+ pull_request:

+ paths:

+ # Migration implementation files

+ - 'repo/fsrepo/migrations/**'

+ - 'test/cli/migrations/**'

+ # Config and repo handling

+ - 'repo/fsrepo/**'

+ # This workflow file itself

+ - '.github/workflows/test-migrations.yml'

+ push:

+ branches:

+ - 'master'

+ - 'release-*'

+ paths:

+ - 'repo/fsrepo/migrations/**'

+ - 'test/cli/migrations/**'

+ - 'repo/fsrepo/**'

+ - '.github/workflows/test-migrations.yml'

+

+concurrency:

+ group: ${{ github.workflow }}-${{ github.event_name }}-${{ github.event_name == 'push' && github.sha || github.ref }}

+ cancel-in-progress: true

+

+jobs:

+ test:

+ strategy:

+ fail-fast: false

+ matrix:

+ os: [ubuntu-latest, windows-latest, macos-latest]

+ runs-on: ${{ matrix.os }}

+ timeout-minutes: 20

+ env:

+ TEST_VERBOSE: 1

+ IPFS_CHECK_RCMGR_DEFAULTS: 1

+ defaults:

+ run:

+ shell: bash

+ steps:

+ - name: Check out Kubo

+ uses: actions/checkout@v6

+

+ - name: Set up Go

+ uses: actions/setup-go@v6

+ with:

+ go-version-file: 'go.mod'

+

+ - name: Build kubo binary

+ run: |

+ make build

+ echo "Built ipfs binary at $(pwd)/cmd/ipfs/"

+

+ - name: Add kubo to PATH

+ run: |

+ echo "$(pwd)/cmd/ipfs" >> $GITHUB_PATH

+

+ - name: Verify ipfs in PATH

+ run: |

+ which ipfs || echo "ipfs not in PATH"

+ ipfs version || echo "Failed to run ipfs version"

+

+ - name: Run migration unit tests

+ run: |

+ go test ./repo/fsrepo/migrations/...

+

+ - name: Run CLI migration tests

+ env:

+ IPFS_PATH: ${{ runner.temp }}/ipfs-test

+ run: |

+ export PATH="${{ github.workspace }}/cmd/ipfs:$PATH"

+ which ipfs || echo "ipfs not found in PATH"

+ ipfs version || echo "Failed to run ipfs version"

+ go test ./test/cli/migrations/...

+

+ - name: Upload test results

+ if: always()

+ uses: actions/upload-artifact@v6

+ with:

+ name: ${{ matrix.os }}-test-results

+ path: |

+ test/**/*.log

+ ${{ runner.temp }}/ipfs-test/

diff --git a/.gitignore b/.gitignore

index cb147456b..890870a6e 100644

--- a/.gitignore

+++ b/.gitignore

@@ -28,6 +28,11 @@ go-ipfs-source.tar.gz

docs/examples/go-ipfs-as-a-library/example-folder/Qm*

/test/sharness/t0054-dag-car-import-export-data/*.car

+# test artifacts from make test_unit / test_cli

+/test/unit/gotest.json

+/test/unit/gotest.junit.xml

+/test/cli/cli-tests.json

+

# ignore build output from snapcraft

/ipfs_*.snap

/parts

diff --git a/.hadolint.yaml b/.hadolint.yaml

new file mode 100644

index 000000000..78b3d23bf

--- /dev/null

+++ b/.hadolint.yaml

@@ -0,0 +1,13 @@

+# Hadolint configuration for Kubo Docker image

+# https://github.com/hadolint/hadolint

+

+# Ignore specific rules

+ignored:

+ # DL3008: Pin versions in apt-get install

+ # We use stable base images and prefer smaller layers over version pinning

+ - DL3008

+

+# Trust base images from these registries

+trustedRegistries:

+ - docker.io

+ - gcr.io

\ No newline at end of file

diff --git a/CHANGELOG.md b/CHANGELOG.md

index fa40e1625..6bc565d86 100644

--- a/CHANGELOG.md

+++ b/CHANGELOG.md

@@ -1,5 +1,14 @@

# Kubo Changelogs

+- [v0.40](docs/changelogs/v0.40.md)

+- [v0.39](docs/changelogs/v0.39.md)

+- [v0.38](docs/changelogs/v0.38.md)

+- [v0.37](docs/changelogs/v0.37.md)

+- [v0.36](docs/changelogs/v0.36.md)

+- [v0.35](docs/changelogs/v0.35.md)

+- [v0.34](docs/changelogs/v0.34.md)

+- [v0.33](docs/changelogs/v0.33.md)

+- [v0.32](docs/changelogs/v0.32.md)

- [v0.31](docs/changelogs/v0.31.md)

- [v0.30](docs/changelogs/v0.30.md)

- [v0.29](docs/changelogs/v0.29.md)

diff --git a/CONTRIBUTING.md b/CONTRIBUTING.md

index 1db5ca246..ed9001df2 100644

--- a/CONTRIBUTING.md

+++ b/CONTRIBUTING.md

@@ -1,6 +1,10 @@

-IPFS as a project, including go-ipfs and all of its modules, follows the [standard IPFS Community contributing guidelines](https://github.com/ipfs/community/blob/master/CONTRIBUTING.md).

+# Contributing to Kubo

-We also adhere to the [GO IPFS Community contributing guidelines](https://github.com/ipfs/community/blob/master/CONTRIBUTING_GO.md) which provide additional information of how to collaborate and contribute in the Go implementation of IPFS.

+**For development setup, building, and testing, see the [Developer Guide](docs/developer-guide.md).**

+

+IPFS as a project, including Kubo and all of its modules, follows the [standard IPFS Community contributing guidelines](https://github.com/ipfs/community/blob/master/CONTRIBUTING.md).

+

+We also adhere to the [Go IPFS Community contributing guidelines](https://github.com/ipfs/community/blob/master/CONTRIBUTING_GO.md) which provide additional information on how to collaborate and contribute to the Go implementation of IPFS.

We appreciate your time and attention for going over these. Please open an issue on ipfs/community if you have any questions.

diff --git a/Dockerfile b/Dockerfile

index 4ed07d3d4..6d43beefa 100644

--- a/Dockerfile

+++ b/Dockerfile

@@ -1,13 +1,16 @@

-FROM --platform=${BUILDPLATFORM:-linux/amd64} golang:1.22 AS builder

+# syntax=docker/dockerfile:1

+# Enables BuildKit with cache mounts for faster builds

+FROM --platform=${BUILDPLATFORM:-linux/amd64} golang:1.25 AS builder

ARG TARGETOS TARGETARCH

-ENV SRC_DIR /kubo

+ENV SRC_DIR=/kubo

-# Download packages first so they can be cached.

+# Cache go module downloads between builds for faster rebuilds

COPY go.mod go.sum $SRC_DIR/

-RUN cd $SRC_DIR \

- && go mod download

+WORKDIR $SRC_DIR

+RUN --mount=type=cache,target=/go/pkg/mod \

+ go mod download

COPY . $SRC_DIR

@@ -18,92 +21,78 @@ ARG IPFS_PLUGINS

# Allow for other targets to be built, e.g.: docker build --build-arg MAKE_TARGET="nofuse"

ARG MAKE_TARGET=build

-# Build the thing.

-# Also: fix getting HEAD commit hash via git rev-parse.

-RUN cd $SRC_DIR \

- && mkdir -p .git/objects \

+# Build ipfs binary with cached go modules and build cache.

+# mkdir .git/objects allows git rev-parse to read commit hash for version info

+RUN --mount=type=cache,target=/go/pkg/mod \

+ --mount=type=cache,target=/root/.cache/go-build \

+ mkdir -p .git/objects \

&& GOOS=$TARGETOS GOARCH=$TARGETARCH GOFLAGS=-buildvcs=false make ${MAKE_TARGET} IPFS_PLUGINS=$IPFS_PLUGINS

-# Using Debian Buster because the version of busybox we're using is based on it

-# and we want to make sure the libraries we're using are compatible. That's also

-# why we're running this for the target platform.

-FROM debian:stable-slim AS utilities

+# Extract required runtime tools from Debian.

+# We use Debian instead of Alpine because we need glibc compatibility

+# for the busybox base image we're using.

+FROM debian:bookworm-slim AS utilities

RUN set -eux; \

apt-get update; \

- apt-get install -y \

+ apt-get install -y --no-install-recommends \

tini \

# Using gosu (~2MB) instead of su-exec (~20KB) because it's easier to

# install on Debian. Useful links:

# - https://github.com/ncopa/su-exec#why-reinvent-gosu

# - https://github.com/tianon/gosu/issues/52#issuecomment-441946745

gosu \

- # This installs fusermount which we later copy over to the target image.

+ # fusermount enables IPFS mount commands

fuse \

ca-certificates \

; \

- rm -rf /var/lib/apt/lists/*

+ apt-get clean; \

+ rm -rf /var/lib/apt/lists/* /tmp/* /var/tmp/*

-# Now comes the actual target image, which aims to be as small as possible.

+# Final minimal image with shell for debugging (busybox provides sh)

FROM busybox:stable-glibc

-# Get the ipfs binary, entrypoint script, and TLS CAs from the build container.

-ENV SRC_DIR /kubo

+# Copy ipfs binary, startup scripts, and runtime dependencies

+ENV SRC_DIR=/kubo

COPY --from=utilities /usr/sbin/gosu /sbin/gosu

COPY --from=utilities /usr/bin/tini /sbin/tini

COPY --from=utilities /bin/fusermount /usr/local/bin/fusermount

COPY --from=utilities /etc/ssl/certs /etc/ssl/certs

COPY --from=builder $SRC_DIR/cmd/ipfs/ipfs /usr/local/bin/ipfs

-COPY --from=builder $SRC_DIR/bin/container_daemon /usr/local/bin/start_ipfs

+COPY --from=builder --chmod=755 $SRC_DIR/bin/container_daemon /usr/local/bin/start_ipfs

COPY --from=builder $SRC_DIR/bin/container_init_run /usr/local/bin/container_init_run

-# Add suid bit on fusermount so it will run properly

+# Set SUID for fusermount to enable FUSE mounting by non-root user

RUN chmod 4755 /usr/local/bin/fusermount

-# Fix permissions on start_ipfs (ignore the build machine's permissions)

-RUN chmod 0755 /usr/local/bin/start_ipfs

-

-# Swarm TCP; should be exposed to the public

-EXPOSE 4001

-# Swarm UDP; should be exposed to the public

-EXPOSE 4001/udp

-# Daemon API; must not be exposed publicly but to client services under you control

+# Swarm P2P port (TCP/UDP) - expose publicly for peer connections

+EXPOSE 4001 4001/udp

+# API port - keep private, only for trusted clients

EXPOSE 5001

-# Web Gateway; can be exposed publicly with a proxy, e.g. as https://ipfs.example.org

+# Gateway port - can be exposed publicly via reverse proxy

EXPOSE 8080

-# Swarm Websockets; must be exposed publicly when the node is listening using the websocket transport (/ipX/.../tcp/8081/ws).

+# Swarm WebSockets - expose publicly for browser-based peers

EXPOSE 8081

-# Create the fs-repo directory and switch to a non-privileged user.

-ENV IPFS_PATH /data/ipfs

-RUN mkdir -p $IPFS_PATH \

+# Create ipfs user (uid 1000) and required directories with proper ownership

+ENV IPFS_PATH=/data/ipfs

+RUN mkdir -p $IPFS_PATH /ipfs /ipns /mfs /container-init.d \

&& adduser -D -h $IPFS_PATH -u 1000 -G users ipfs \

- && chown ipfs:users $IPFS_PATH

+ && chown ipfs:users $IPFS_PATH /ipfs /ipns /mfs /container-init.d

-# Create mount points for `ipfs mount` command

-RUN mkdir /ipfs /ipns \

- && chown ipfs:users /ipfs /ipns

-

-# Create the init scripts directory

-RUN mkdir /container-init.d \

- && chown ipfs:users /container-init.d

-

-# Expose the fs-repo as a volume.

-# start_ipfs initializes an fs-repo if none is mounted.

-# Important this happens after the USER directive so permissions are correct.

+# Volume for IPFS repository data persistence

VOLUME $IPFS_PATH

# The default logging level

-ENV IPFS_LOGGING ""

+ENV GOLOG_LOG_LEVEL=""

-# This just makes sure that:

-# 1. There's an fs-repo, and initializes one if there isn't.

-# 2. The API and Gateway are accessible from outside the container.

+# Entrypoint initializes IPFS repo if needed and configures networking.

+# tini ensures proper signal handling and zombie process cleanup

ENTRYPOINT ["/sbin/tini", "--", "/usr/local/bin/start_ipfs"]

-# Healthcheck for the container

-# QmUNLLsPACCz1vLxQVkXqqLX5R1X345qqfHbsf67hvA3Nn is the CID of empty folder

+# Health check verifies IPFS daemon is responsive.

+# Uses empty directory CID (QmUNLLsPACCz1vLxQVkXqqLX5R1X345qqfHbsf67hvA3Nn) as test

HEALTHCHECK --interval=30s --timeout=3s --start-period=5s --retries=3 \

CMD ipfs --api=/ip4/127.0.0.1/tcp/5001 dag stat /ipfs/QmUNLLsPACCz1vLxQVkXqqLX5R1X345qqfHbsf67hvA3Nn || exit 1

-# Execute the daemon subcommand by default

+# Default: run IPFS daemon with auto-migration enabled

CMD ["daemon", "--migrate=true", "--agent-version-suffix=docker"]

diff --git a/FUNDING.json b/FUNDING.json

new file mode 100644

index 000000000..9085792a6

--- /dev/null

+++ b/FUNDING.json

@@ -0,0 +1,5 @@

+{

+ "opRetro": {

+ "projectId": "0x7f330267969cf845a983a9d4e7b7dbcca5c700a5191269af377836d109e0bb69"

+ }

+}

diff --git a/README.md b/README.md

index 30a884e96..c1eaf9748 100644

--- a/README.md

+++ b/README.md

@@ -1,8 +1,8 @@

-  +

+

- Kubo: IPFS Implementation in GO

+ Kubo: IPFS Implementation in Go

@@ -11,488 +11,214 @@

-

-  -

-  +

+  +

+

-

-

+

+What is Kubo? | Quick Taste | Install | Documentation | Development | Getting Help

+

+

## What is Kubo?

-Kubo was the first IPFS implementation and is the most widely used one today. Implementing the *Interplanetary Filesystem* - the Web3 standard for content-addressing, interoperable with HTTP. Thus powered by IPLD's data models and the libp2p for network communication. Kubo is written in Go.

+Kubo was the first [IPFS](https://docs.ipfs.tech/concepts/what-is-ipfs/) implementation and is the [most widely used one today](https://probelab.io/ipfs/topology/#chart-agent-types-avg). It takes an opinionated approach to content-addressing ([CIDs](https://docs.ipfs.tech/concepts/glossary/#cid), [DAGs](https://docs.ipfs.tech/concepts/glossary/#dag)) that maximizes interoperability: [UnixFS](https://docs.ipfs.tech/concepts/glossary/#unixfs) for files and directories, [HTTP Gateways](https://docs.ipfs.tech/concepts/glossary/#gateway) for web browsers, [Bitswap](https://docs.ipfs.tech/concepts/glossary/#bitswap) and [HTTP](https://specs.ipfs.tech/http-gateways/trustless-gateway/) for verifiable data transfer.

-Featureset

-- Runs an IPFS-Node as a network service that is part of LAN and WAN DHT

-- [HTTP Gateway](https://specs.ipfs.tech/http-gateways/) (`/ipfs` and `/ipns`) functionality for trusted and [trustless](https://docs.ipfs.tech/reference/http/gateway/#trustless-verifiable-retrieval) content retrieval

-- [HTTP Routing V1](https://specs.ipfs.tech/routing/http-routing-v1/) (`/routing/v1`) client and server implementation for [delegated routing](./docs/delegated-routing.md) lookups

-- [HTTP Kubo RPC API](https://docs.ipfs.tech/reference/kubo/rpc/) (`/api/v0`) to access and control the daemon

-- [Command Line Interface](https://docs.ipfs.tech/reference/kubo/cli/) based on (`/api/v0`) RPC API

-- [WebUI](https://github.com/ipfs/ipfs-webui/#readme) to manage the Kubo node

-- [Content blocking](/docs/content-blocking.md) support for operators of public nodes

+**Features:**

-### Other implementations

+- Runs an IPFS node as a network service (LAN [mDNS](https://github.com/libp2p/specs/blob/master/discovery/mdns.md) and WAN [Amino DHT](https://docs.ipfs.tech/concepts/glossary/#dht))

+- [Command-line interface](https://docs.ipfs.tech/reference/kubo/cli/) (`ipfs --help`)

+- [WebUI](https://github.com/ipfs/ipfs-webui/#readme) for node management

+- [HTTP Gateway](https://specs.ipfs.tech/http-gateways/) for trusted and [trustless](https://docs.ipfs.tech/reference/http/gateway/#trustless-verifiable-retrieval) content retrieval

+- [HTTP RPC API](https://docs.ipfs.tech/reference/kubo/rpc/) to control the daemon

+- [HTTP Routing V1](https://specs.ipfs.tech/routing/http-routing-v1/) client and server for [delegated routing](./docs/delegated-routing.md)

+- [Content blocking](./docs/content-blocking.md) for public node operators

-See [List](https://docs.ipfs.tech/basics/ipfs-implementations/)

+**Other IPFS implementations:** [Helia](https://github.com/ipfs/helia) (JavaScript), [more...](https://docs.ipfs.tech/concepts/ipfs-implementations/)

-## What is IPFS?

+## Quick Taste

-IPFS is a global, versioned, peer-to-peer filesystem. It combines good ideas from previous systems such as Git, BitTorrent, Kademlia, SFS, and the Web. It is like a single BitTorrent swarm, exchanging git objects. IPFS provides an interface as simple as the HTTP web, but with permanence built-in. You can also mount the world at /ipfs.

+After [installing Kubo](#install), verify it works:

-For more info see: https://docs.ipfs.tech/concepts/what-is-ipfs/

+```console

+$ ipfs init

+generating ED25519 keypair...done

+peer identity: 12D3KooWGcSLQdLDBi2BvoP8WnpdHvhWPbxpGcqkf93rL2XMZK7R

-Before opening an issue, consider using one of the following locations to ensure you are opening your thread in the right place:

- - kubo (previously named go-ipfs) _implementation_ bugs in [this repo](https://github.com/ipfs/kubo/issues).

- - Documentation issues in [ipfs/docs issues](https://github.com/ipfs/ipfs-docs/issues).

- - IPFS _design_ in [ipfs/specs issues](https://github.com/ipfs/specs/issues).

- - Exploration of new ideas in [ipfs/notes issues](https://github.com/ipfs/notes/issues).

- - Ask questions and meet the rest of the community at the [IPFS Forum](https://discuss.ipfs.tech).

- - Or [chat with us](https://docs.ipfs.tech/community/chat/).

+$ ipfs daemon &

+Daemon is ready

-[](https://www.youtube.com/channel/UCdjsUXJ3QawK4O5L1kqqsew) [](https://twitter.com/IPFS)

+$ echo "hello IPFS" | ipfs add -q --cid-version 1

+bafkreicouv3sksjuzxb3rbb6rziy6duakk2aikegsmtqtz5rsuppjorxsa

-## Next milestones

+$ ipfs cat bafkreicouv3sksjuzxb3rbb6rziy6duakk2aikegsmtqtz5rsuppjorxsa

+hello IPFS

+```

-[Milestones on GitHub](https://github.com/ipfs/kubo/milestones)

+Verify this CID is provided by your node to the IPFS network:

-

-## Table of Contents

-

-- [What is Kubo?](#what-is-kubo)

-- [What is IPFS?](#what-is-ipfs)

-- [Next milestones](#next-milestones)

-- [Table of Contents](#table-of-contents)

-- [Security Issues](#security-issues)

-- [Minimal System Requirements](#minimal-system-requirements)

-- [Install](#install)

- - [Docker](#docker)

- - [Official prebuilt binaries](#official-prebuilt-binaries)

- - [Updating](#updating)

- - [Using ipfs-update](#using-ipfs-update)

- - [Downloading builds using IPFS](#downloading-builds-using-ipfs)

- - [Unofficial Linux packages](#unofficial-linux-packages)

- - [ArchLinux](#arch-linux)

- - [Gentoo Linux](#gentoo-linux)

- - [Nix](#nix)

- - [Solus](#solus)

- - [openSUSE](#opensuse)

- - [Guix](#guix)

- - [Snap](#snap)

- - [Ubuntu PPA](#ubuntu-ppa)

- - [Unofficial Windows packages](#unofficial-windows-packages)

- - [Chocolatey](#chocolatey)

- - [Scoop](#scoop)

- - [Unofficial MacOS packages](#unofficial-macos-packages)

- - [MacPorts](#macports)

- - [Nix](#nix-macos)

- - [Homebrew](#homebrew)

- - [Build from Source](#build-from-source)

- - [Install Go](#install-go)

- - [Download and Compile IPFS](#download-and-compile-ipfs)

- - [Cross Compiling](#cross-compiling)

- - [Troubleshooting](#troubleshooting)

-- [Getting Started](#getting-started)

- - [Usage](#usage)

- - [Some things to try](#some-things-to-try)

- - [Troubleshooting](#troubleshooting-1)

-- [Packages](#packages)

-- [Development](#development)

- - [Map of Implemented Subsystems](#map-of-implemented-subsystems)

- - [CLI, HTTP-API, Architecture Diagram](#cli-http-api-architecture-diagram)

- - [Testing](#testing)

- - [Development Dependencies](#development-dependencies)

- - [Developer Notes](#developer-notes)

-- [Maintainer Info](#maintainer-info)

-- [Contributing](#contributing)

-- [License](#license)

-

-## Security Issues

-

-Please follow [`SECURITY.md`](SECURITY.md).

-

-### Minimal System Requirements

-

-IPFS can run on most Linux, macOS, and Windows systems. We recommend running it on a machine with at least 4 GB of RAM and 2 CPU cores (kubo is highly parallel). On systems with less memory, it may not be completely stable, and you run on your own risk.

+See `ipfs add --help` for all import options. Ready for more? Follow the [command-line quick start](https://docs.ipfs.tech/how-to/command-line-quick-start/).

## Install

-The canonical download instructions for IPFS are over at: https://docs.ipfs.tech/install/. It is **highly recommended** you follow those instructions if you are not interested in working on IPFS development.

+Follow the [official installation guide](https://docs.ipfs.tech/install/command-line/), or choose: [prebuilt binary](#official-prebuilt-binaries) | [Docker](#docker) | [package manager](#package-managers) | [from source](#build-from-source).

+

+Prefer a GUI? Try [IPFS Desktop](https://docs.ipfs.tech/install/ipfs-desktop/) and/or [IPFS Companion](https://docs.ipfs.tech/install/ipfs-companion/).

+

+### Minimal System Requirements

+

+Kubo runs on most Linux, macOS, and Windows systems. For optimal performance, we recommend at least 6 GB of RAM and 2 CPU cores (more is ideal, as Kubo is highly parallel).

+

+> [!IMPORTANT]

+> Larger pinsets require additional memory, with an estimated ~1 GiB of RAM per 20 million items for reproviding to the Amino DHT.

+

+> [!CAUTION]

+> Systems with less than the recommended memory may experience instability, frequent OOM errors or restarts, and missing data announcement (reprovider window), which can make data fully or partially inaccessible to other peers. Running Kubo on underprovisioned hardware is at your own risk.

+

+### Official Prebuilt Binaries

+

+Download from https://dist.ipfs.tech#kubo or [GitHub Releases](https://github.com/ipfs/kubo/releases/latest).

### Docker

-Official images are published at https://hub.docker.com/r/ipfs/kubo/:

+Official images are published at https://hub.docker.com/r/ipfs/kubo/.

-[](https://hub.docker.com/r/ipfs/kubo/)

+#### 🟢 Release Images

-- 🟢 Releases

- - `latest` and `release` tags always point at [the latest stable release](https://github.com/ipfs/kubo/releases/latest)

- - `vN.N.N` points at a specific [release tag](https://github.com/ipfs/kubo/releases)

- - These are production grade images.

-- 🟠 We also provide experimental developer builds

- - `master-latest` always points at the `HEAD` of the `master` branch

- - `master-YYYY-DD-MM-GITSHA` points at a specific commit from the `master` branch

- - These tags are used by developers for internal testing, not intended for end users or production use.

+Use these for production deployments.

+

+- `latest` and [`release`](https://hub.docker.com/r/ipfs/kubo/tags?name=release) always point at [the latest stable release](https://github.com/ipfs/kubo/releases/latest)

+- [`vN.N.N`](https://hub.docker.com/r/ipfs/kubo/tags?name=v) points at a specific [release tag](https://github.com/ipfs/kubo/releases)

```console

$ docker pull ipfs/kubo:latest

$ docker run --rm -it --net=host ipfs/kubo:latest

```

-To [customize your node](https://docs.ipfs.tech/install/run-ipfs-inside-docker/#customizing-your-node),

-pass necessary config via `-e` or by mounting scripts in the `/container-init.d`.

+To [customize your node](https://docs.ipfs.tech/install/run-ipfs-inside-docker/#customizing-your-node), pass config via `-e` or mount scripts in `/container-init.d`.

-Learn more at https://docs.ipfs.tech/install/run-ipfs-inside-docker/

+#### 🟠 Developer Preview Images

-### Official prebuilt binaries

+For internal testing, not intended for production.

-The official binaries are published at https://dist.ipfs.tech#kubo:

+- [`master-latest`](https://hub.docker.com/r/ipfs/kubo/tags?name=master-latest) points at `HEAD` of [`master`](https://github.com/ipfs/kubo/commits/master/)

+- [`master-YYYY-DD-MM-GITSHA`](https://hub.docker.com/r/ipfs/kubo/tags?name=master-2) points at a specific commit

-[](https://dist.ipfs.tech#kubo)

+#### 🔴 Internal Staging Images

-From there:

-- Click the blue "Download Kubo" on the right side of the page.

-- Open/extract the archive.

-- Move kubo (`ipfs`) to your path (`install.sh` can do it for you).

+For testing arbitrary commits and experimental patches (force push to `staging` branch).

-If you are unable to access [dist.ipfs.tech](https://dist.ipfs.tech#kubo), you can also download kubo (go-ipfs) from:

-- this project's GitHub [releases](https://github.com/ipfs/kubo/releases/latest) page

-- `/ipns/dist.ipfs.tech` at [dweb.link](https://dweb.link/ipns/dist.ipfs.tech#kubo) gateway

-

-#### Updating

-

-##### Using ipfs-update

-

-IPFS has an updating tool that can be accessed through `ipfs update`. The tool is

-not installed alongside IPFS in order to keep that logic independent of the main

-codebase. To install `ipfs-update` tool, [download it here](https://dist.ipfs.tech/#ipfs-update).

-

-##### Downloading builds using IPFS

-

-List the available versions of Kubo (go-ipfs) implementation:

-

-```console

-$ ipfs cat /ipns/dist.ipfs.tech/kubo/versions

-```

-

-Then, to view available builds for a version from the previous command (`$VERSION`):

-

-```console

-$ ipfs ls /ipns/dist.ipfs.tech/kubo/$VERSION

-```

-

-To download a given build of a version:

-

-```console

-$ ipfs get /ipns/dist.ipfs.tech/kubo/$VERSION/kubo_$VERSION_darwin-386.tar.gz # darwin 32-bit build

-$ ipfs get /ipns/dist.ipfs.tech/kubo/$VERSION/kubo_$VERSION_darwin-amd64.tar.gz # darwin 64-bit build

-$ ipfs get /ipns/dist.ipfs.tech/kubo/$VERSION/kubo_$VERSION_freebsd-amd64.tar.gz # freebsd 64-bit build

-$ ipfs get /ipns/dist.ipfs.tech/kubo/$VERSION/kubo_$VERSION_linux-386.tar.gz # linux 32-bit build

-$ ipfs get /ipns/dist.ipfs.tech/kubo/$VERSION/kubo_$VERSION_linux-amd64.tar.gz # linux 64-bit build

-$ ipfs get /ipns/dist.ipfs.tech/kubo/$VERSION/kubo_$VERSION_linux-arm.tar.gz # linux arm build

-$ ipfs get /ipns/dist.ipfs.tech/kubo/$VERSION/kubo_$VERSION_windows-amd64.zip # windows 64-bit build

-```

-

-### Unofficial Linux packages

-

-

-  -

-

-- [ArchLinux](#arch-linux)

-- [Gentoo Linux](#gentoo-linux)

-- [Nix](#nix-linux)

-- [Solus](#solus)

-- [openSUSE](#opensuse)

-- [Guix](#guix)

-- [Snap](#snap)

-- [Ubuntu PPA](#ubuntu-ppa)

-

-#### Arch Linux

-

-[](https://wiki.archlinux.org/title/IPFS)

-

-```bash

-# pacman -S kubo

-```

-

-[](https://aur.archlinux.org/packages/kubo/)

-

-#### Gentoo Linux

-

-https://wiki.gentoo.org/wiki/Kubo

-

-```bash

-# emerge -a net-p2p/kubo

-```

-

-https://packages.gentoo.org/packages/net-p2p/kubo

-

-#### Nix

-

-With the purely functional package manager [Nix](https://nixos.org/nix/) you can install kubo (go-ipfs) like this:

-

-```

-$ nix-env -i kubo

-```

-

-You can also install the Package by using its attribute name, which is also `kubo`.

-

-#### Solus

-

-[Package for Solus](https://dev.getsol.us/source/kubo/repository/master/)

-

-```

-$ sudo eopkg install kubo

-```

-

-You can also install it through the Solus software center.

-

-#### openSUSE

-

-[Community Package for go-ipfs](https://software.opensuse.org/package/go-ipfs)

-

-#### Guix

-

-[Community Package for go-ipfs](https://packages.guix.gnu.org/packages/go-ipfs/0.11.0/) is no out-of-date.

-

-#### Snap

-

-No longer supported, see rationale in [kubo#8688](https://github.com/ipfs/kubo/issues/8688).

-

-#### Ubuntu PPA

-

-[PPA homepage](https://launchpad.net/~twdragon/+archive/ubuntu/ipfs) on Launchpad.

-

-##### Latest Ubuntu (>= 20.04 LTS)

-```sh

-sudo add-apt-repository ppa:twdragon/ipfs

-sudo apt update

-sudo apt install ipfs-kubo

-```

-

-##### Any Ubuntu version

-

-```sh

-sudo su

-echo 'deb https://ppa.launchpadcontent.net/twdragon/ipfs/ubuntu <> main' >> /etc/apt/sources.list.d/ipfs

-echo 'deb-src https://ppa.launchpadcontent.net/twdragon/ipfs/ubuntu <> main' >> /etc/apt/sources.list.d/ipfs

-exit

-sudo apt update

-sudo apt install ipfs-kubo

-```

-where `<>` is the codename of your Ubuntu distribution (for example, `jammy` for 22.04 LTS). During the first installation the package maintenance script may automatically ask you about which networking profile, CPU accounting model, and/or existing node configuration file you want to use.

-

-**NOTE**: this method also may work with any compatible Debian-based distro which has `libc6` inside, and APT as a package manager.

-

-### Unofficial Windows packages

-

-- [Chocolatey](#chocolatey)

-- [Scoop](#scoop)

-

-#### Chocolatey

-

-No longer supported, see rationale in [kubo#9341](https://github.com/ipfs/kubo/issues/9341).

-

-#### Scoop

-

-Scoop provides kubo as `kubo` in its 'extras' bucket.

-

-```Powershell

-PS> scoop bucket add extras

-PS> scoop install kubo

-```

-

-### Unofficial macOS packages

-

-- [MacPorts](#macports)

-- [Nix](#nix-macos)

-- [Homebrew](#homebrew)

-

-#### MacPorts

-

-The package [ipfs](https://ports.macports.org/port/ipfs) currently points to kubo (go-ipfs) and is being maintained.

-

-```

-$ sudo port install ipfs

-```

-

-#### Nix

-

-In macOS you can use the purely functional package manager [Nix](https://nixos.org/nix/):

-

-```

-$ nix-env -i kubo

-```

-

-You can also install the Package by using its attribute name, which is also `kubo`.

-

-#### Homebrew

-

-A Homebrew formula [ipfs](https://formulae.brew.sh/formula/ipfs) is maintained too.

-

-```

-$ brew install --formula ipfs

-```

+- [`staging-latest`](https://hub.docker.com/r/ipfs/kubo/tags?name=staging-latest) points at `HEAD` of [`staging`](https://github.com/ipfs/kubo/commits/staging/)

+- [`staging-YYYY-DD-MM-GITSHA`](https://hub.docker.com/r/ipfs/kubo/tags?name=staging-2) points at a specific commit

### Build from Source

-kubo's build system requires Go and some standard POSIX build tools:

-

-* GNU make

-* Git

-* GCC (or some other go compatible C Compiler) (optional)

-

-To build without GCC, build with `CGO_ENABLED=0` (e.g., `make build CGO_ENABLED=0`).

-

-#### Install Go

-

-

-

-If you need to update: [Download latest version of Go](https://golang.org/dl/).

-

-You'll need to add Go's bin directories to your `$PATH` environment variable e.g., by adding these lines to your `/etc/profile` (for a system-wide installation) or `$HOME/.profile`:

-

-```

-export PATH=$PATH:/usr/local/go/bin

-export PATH=$PATH:$GOPATH/bin

+```bash

+git clone https://github.com/ipfs/kubo.git

+cd kubo

+make build # creates cmd/ipfs/ipfs

+make install # installs to $GOPATH/bin/ipfs

```

-(If you run into trouble, see the [Go install instructions](https://golang.org/doc/install)).

+See the [Developer Guide](docs/developer-guide.md) for details, Windows instructions, and troubleshooting.

-#### Download and Compile IPFS

+### Package Managers

-```

-$ git clone https://github.com/ipfs/kubo.git

+Kubo is available in community-maintained packages across many operating systems, Linux distributions, and package managers. See [Repology](https://repology.org/project/kubo/versions) for the full list.

-$ cd kubo

-$ make install

-```

+> [!WARNING]

+> These packages are maintained by third-party volunteers. The IPFS Project and Kubo maintainers are not responsible for their contents or supply chain security. For increased security, [build from source](#build-from-source).

-Alternatively, you can run `make build` to build the go-ipfs binary (storing it in `cmd/ipfs/ipfs`) without installing it.

+#### Linux

-**NOTE:** If you get an error along the lines of "fatal error: stdlib.h: No such file or directory", you're missing a C compiler. Either re-run `make` with `CGO_ENABLED=0` or install GCC.

+| Distribution | Install | Version |

+|--------------|---------|---------|

+| Ubuntu | [PPA](https://launchpad.net/~twdragon/+archive/ubuntu/ipfs): `sudo apt install ipfs-kubo` | [](https://launchpad.net/~twdragon/+archive/ubuntu/ipfs) |

+| Arch | `pacman -S kubo` | [](https://archlinux.org/packages/extra/x86_64/kubo/) |

+| Fedora | [COPR](https://copr.fedorainfracloud.org/coprs/taw/ipfs/): `dnf install kubo` | [](https://copr.fedorainfracloud.org/coprs/taw/ipfs/) |

+| Nix | `nix-env -i kubo` | [](https://search.nixos.org/packages?query=kubo) |

+| Gentoo | `emerge -a net-p2p/kubo` | [](https://packages.gentoo.org/packages/net-p2p/kubo) |

+| openSUSE | `zypper install kubo` | [](https://software.opensuse.org/package/kubo) |

+| Solus | `sudo eopkg install kubo` | [](https://packages.getsol.us/shannon/k/kubo/) |

+| Guix | `guix install kubo` | [](https://packages.guix.gnu.org/packages/kubo/) |

+| _other_ | [See Repology for the full list](https://repology.org/project/kubo/versions) | |

-##### Cross Compiling

+~~Snap~~ no longer supported ([#8688](https://github.com/ipfs/kubo/issues/8688))

-Compiling for a different platform is as simple as running:

+#### macOS

-```

-make build GOOS=myTargetOS GOARCH=myTargetArchitecture

-```

+| Manager | Install | Version |

+|---------|---------|---------|

+| Homebrew | `brew install ipfs` | [](https://formulae.brew.sh/formula/ipfs) |

+| MacPorts | `sudo port install ipfs` | [](https://ports.macports.org/port/ipfs/) |

+| Nix | `nix-env -i kubo` | [](https://search.nixos.org/packages?query=kubo) |

+| _other_ | [See Repology for the full list](https://repology.org/project/kubo/versions) | |

-#### Troubleshooting

+#### Windows

-- Separate [instructions are available for building on Windows](docs/windows.md).

-- `git` is required in order for `go get` to fetch all dependencies.

-- Package managers often contain out-of-date `golang` packages.

- Ensure that `go version` reports at least 1.10. See above for how to install go.

-- If you are interested in development, please install the development

-dependencies as well.

-- Shell command completions can be generated with one of the `ipfs commands completion` subcommands. Read [docs/command-completion.md](docs/command-completion.md) to learn more.

-- See the [misc folder](https://github.com/ipfs/kubo/tree/master/misc) for how to connect IPFS to systemd or whatever init system your distro uses.

+| Manager | Install | Version |

+|---------|---------|---------|

+| Scoop | `scoop install kubo` | [](https://scoop.sh/#/apps?q=kubo) |

+| _other_ | [See Repology for the full list](https://repology.org/project/kubo/versions) | |

-## Getting Started

+~~Chocolatey~~ no longer supported ([#9341](https://github.com/ipfs/kubo/issues/9341))

-### Usage

+## Documentation

-[](https://docs.ipfs.tech/how-to/command-line-quick-start/)

-[](https://docs.ipfs.tech/reference/kubo/cli/)

-

-To start using IPFS, you must first initialize IPFS's config files on your

-system, this is done with `ipfs init`. See `ipfs init --help` for information on

-the optional arguments it takes. After initialization is complete, you can use

-`ipfs mount`, `ipfs add` and any of the other commands to explore!

-

-### Some things to try

-

-Basic proof of 'ipfs working' locally:

-

- echo "hello world" > hello

- ipfs add hello

- # This should output a hash string that looks something like:

- # QmT78zSuBmuS4z925WZfrqQ1qHaJ56DQaTfyMUF7F8ff5o

- ipfs cat <that hash>

-

-### HTTP/RPC clients

-

-For programmatic interaction with Kubo, see our [list of HTTP/RPC clients](docs/http-rpc-clients.md).

-

-### Troubleshooting

-

-If you have previously installed IPFS before and you are running into problems getting a newer version to work, try deleting (or backing up somewhere else) your IPFS config directory (~/.ipfs by default) and rerunning `ipfs init`. This will reinitialize the config file to its defaults and clear out the local datastore of any bad entries.

-

-Please direct general questions and help requests to our [forums](https://discuss.ipfs.tech).

-

-If you believe you've found a bug, check the [issues list](https://github.com/ipfs/kubo/issues) and, if you don't see your problem there, either come talk to us on [Matrix chat](https://docs.ipfs.tech/community/chat/), or file an issue of your own!

-

-## Packages

-

-See [IPFS in GO](https://docs.ipfs.tech/reference/go/api/) documentation.

+| Topic | Description |

+|-------|-------------|

+| [Configuration](docs/config.md) | All config options reference |

+| [Environment variables](docs/environment-variables.md) | Runtime settings via env vars |

+| [Experimental features](docs/experimental-features.md) | Opt-in features in development |

+| [HTTP Gateway](docs/gateway.md) | Path, subdomain, and trustless gateway setup |

+| [HTTP RPC clients](docs/http-rpc-clients.md) | Client libraries for Go, JS |

+| [Delegated routing](docs/delegated-routing.md) | Multi-router and HTTP routing |

+| [Metrics & monitoring](docs/metrics.md) | Prometheus metrics |

+| [Content blocking](docs/content-blocking.md) | Denylist for public nodes |

+| [Customizing](docs/customizing.md) | Unsure whether to use Plugins, Boxo, or a fork? |

+| [Debug guide](docs/debug-guide.md) | CPU profiles, memory analysis, tracing |

+| [Changelogs](docs/changelogs/) | Release notes for each version |

+| [All documentation](https://github.com/ipfs/kubo/tree/master/docs) | Full list of docs |

## Development

-Some places to get you started on the codebase:

+See the [Developer Guide](docs/developer-guide.md) for build instructions, testing, and contribution workflow.

-- Main file: [./cmd/ipfs/main.go](https://github.com/ipfs/kubo/blob/master/cmd/ipfs/main.go)

-- CLI Commands: [./core/commands/](https://github.com/ipfs/kubo/tree/master/core/commands)

-- Bitswap (the data trading engine): [go-bitswap](https://github.com/ipfs/go-bitswap)

-- libp2p

- - libp2p: https://github.com/libp2p/go-libp2p

- - DHT: https://github.com/libp2p/go-libp2p-kad-dht

-- [IPFS : The `Add` command demystified](https://github.com/ipfs/kubo/tree/master/docs/add-code-flow.md)

+## Getting Help

-### Map of Implemented Subsystems

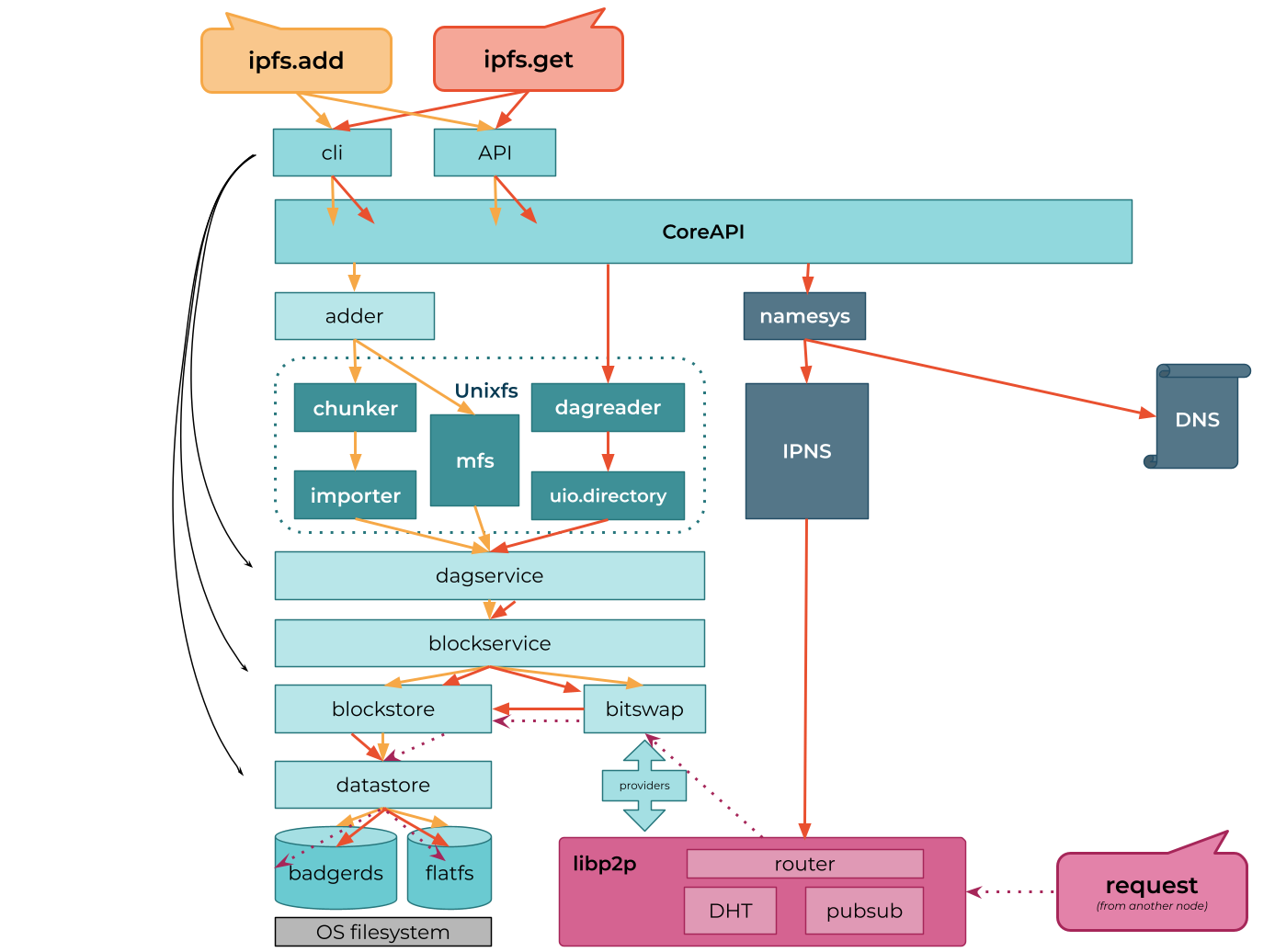

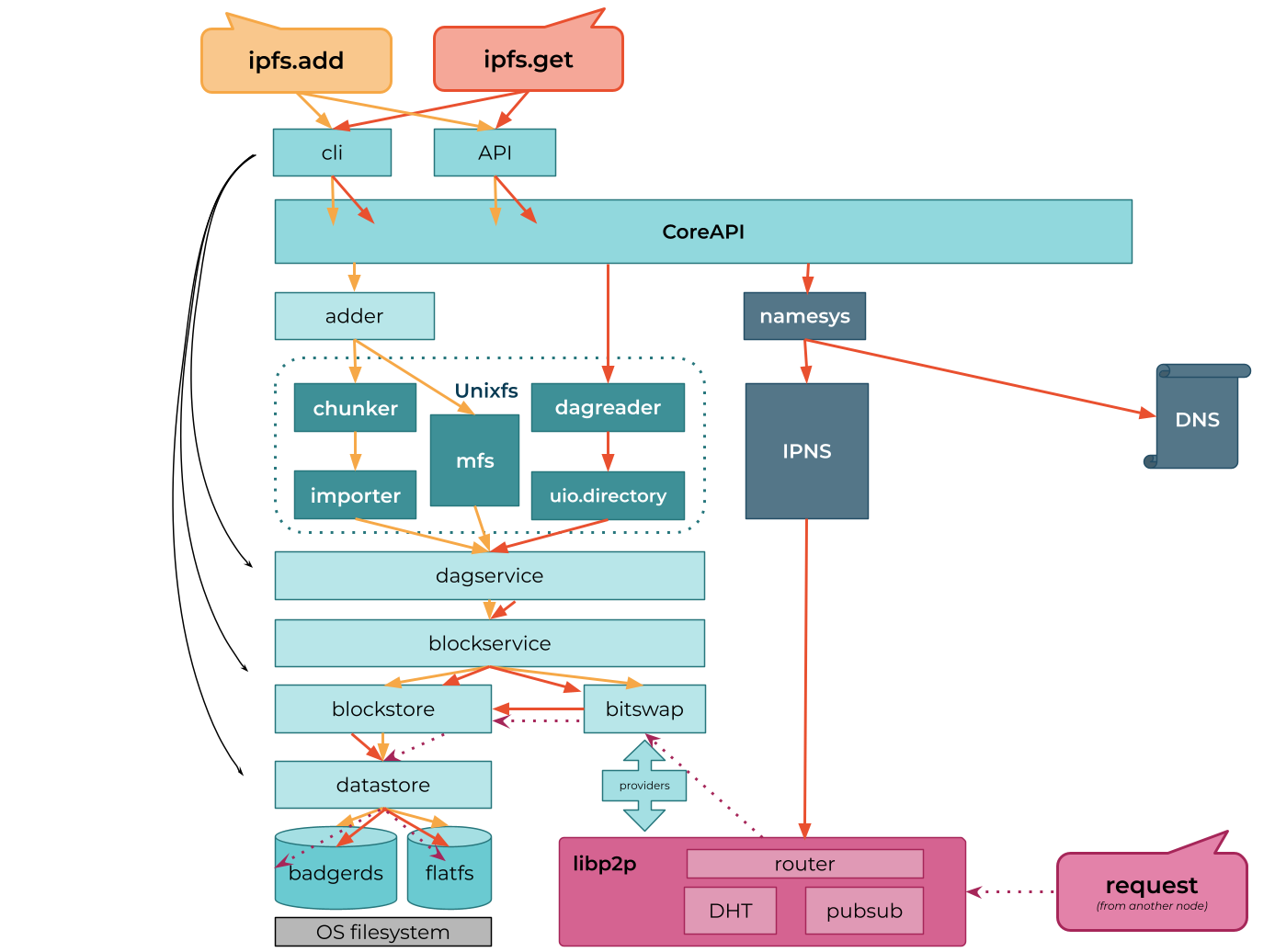

-**WIP**: This is a high-level architecture diagram of the various sub-systems of this specific implementation. To be updated with how they interact. Anyone who has suggestions is welcome to comment [here](https://docs.google.com/drawings/d/1OVpBT2q-NtSJqlPX3buvjYhOnWfdzb85YEsM_njesME/edit) on how we can improve this!

-

-

-

-- [ArchLinux](#arch-linux)

-- [Gentoo Linux](#gentoo-linux)

-- [Nix](#nix-linux)

-- [Solus](#solus)

-- [openSUSE](#opensuse)

-- [Guix](#guix)

-- [Snap](#snap)

-- [Ubuntu PPA](#ubuntu-ppa)

-

-#### Arch Linux

-

-[](https://wiki.archlinux.org/title/IPFS)

-

-```bash

-# pacman -S kubo

-```

-

-[](https://aur.archlinux.org/packages/kubo/)

-

-#### Gentoo Linux

-

-https://wiki.gentoo.org/wiki/Kubo

-

-```bash